Image Apps Like Lensa AI Are Sweeping the Internet, and Stealing From Artists

Amy Stelladia felt exhausted when she found out her art had been stolen.

The Spanish illustrator, who has worked for the likes of Disney and Vault Comics, told The Daily Beast that her life as an artist has been a “constant battle to stay relevant, valuable, and visible.” So when she found out that some of her old work was being plundered, she wasn’t completely shocked, though she was a little surprised to learn that what had been taken was old fan art she had posted on DeviantArt.

In fact, Stelladia is one of many victims of what is arguably the biggest art heist in history. Their work wasn’t taken by a team of thieves in an Ocean’s Eleven-style caper. Rather, it was quietly scraped from the web by a bot—and later used to train some of the most sophisticated artificial intelligence models out there, including Stable Diffusion and Imagen.

“[Artists] are often in the position to justify our profession, and every time something like this comes… we feel angry, frustrated, and exhausted,” she said. “After everything that’s been going on, I mainly feel exhausted and powerless, like most of the constant effort I put on my work just goes down the drain.”

Now, Stelladia’s art has gone viral for all the wrong reasons. Over the last few weeks, AI image generators like Lensa and MyHeritage Time Machine, which are built on Stable Diffusion, have trended on TikTok and Instagram. The apps turn users’ photos into stylized art pieces or place them in different time periods (e.g., as a cowboy or an 18th-century French aristocrat).

However, the datasets used to train the AI contained hundreds of millions of images plucked from different web pages—including artwork from artists like Stelladia, without their knowledge or consent. Since both Lensa and Time Machine cost money to use, this means that private companies are making money off of these artists’ own work. And the artists don’t see a cent.

“They are meant to compete with our own work, using pieces and conscious decisions made by artists but purged from all that context and meaning,” Stelladia explained. “It just feels wrong to use people’s life work without consent, to build something that can take work opportunities away.”

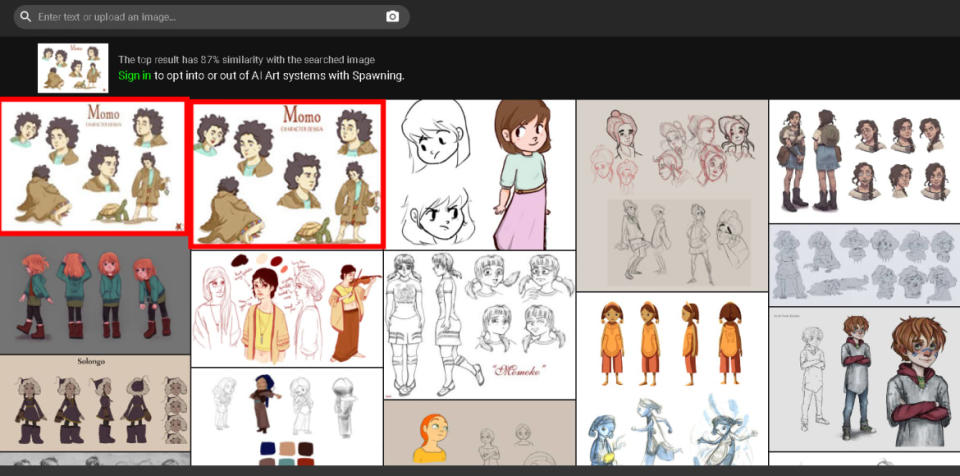

Stelladia learned about her art being used this way after discovering a website called HaveIBeenTrained.com. The site comes from Spawning, an art activist group that’s building tools for artists to learn whether their work has been taken to train large AI models. The group also provides methods to opt out of these datasets.

Ran a little test on "Have I been trained?" to see if my art was on LAION's dataset. Of course, most of my artwork posted years ago on Deviantart and Behance was.

— Amy Stelladia ✏🏳️‍🌈 (@Stella_di_A) December 5, 2022

“I think consent is fundamental and will become more important over time,” Mat Dryhurst, a Berlin-based artist and co-creator of Spawning, told The Daily Beast. He and his partner Holly Herndon have been leading figures in AI art since 2016. In 2019, the pair produced an album under Herndon’s name that used an AI “singer” to provide vocals on several tracks. More recently, they helped develop Holly+, a “digital twin” of Herndon that allows her to deepfake her own singing voice.

Dryhurst stressed, however, that the models they used to produce these vocals were trained on the voices of consenting individuals. So while the pair are major proponents of AI art and of artists using digital tools as part of their process, he described himself as a “consent absolutist” when it comes to AI.

HaveIBeenTrained.com gives artists a chance to reclaim their work from private companies that want to make an easy dollar off of their labor. To build the tool, Spawning partnered with LAION, the nonprofit AI network that built many of the datasets on which these generators were trained, so that artists can request that their work be scrubbed from those datasets directly. That gives artists a way to reclaim their work that many of them might not otherwise have known existed.

Stelladia used HaveIBeenTrained.com to discover that her old fan art had been used to train AI image generators without her permission.

“We’re explicitly concerned in what can be done with consensual data relationships, and building tools to make that vision possible,” said Dryhurst. “That’s our main focus fundamentally. I’m not a complete alarmist about this, but I think it’s the right thing to do and sets the field off on the right foot.”

However, Dryhurst admits that it’s not a perfect solution. The unfortunate reality is that many other datasets have been assembled in exactly the same way, and just because LAION is willing to help doesn’t mean everyone else will be on board. After all, data is the most valuable resource of the digital age: the more data a model is trained on, the more sophisticated it can become, generating everything from images and music to essays and even videos.

“The bad news is that the tools that are being used right now to really personalize this stuff are open code, so there’s many other services that are using them,” Dryhurst said. “These are open tools that will persist and exist.” This fact has only served to harden Spawning’s resolve to help artists reclaim their data. The more artists are aware of their art being used in this way, the more they can take a stand and fight back using whatever tools they can.

Some might say that what these AI generators do is no different from, say, collage art, the work of pop artists like Andy Warhol, or musicians who sample other songs. Stelladia, however, said the situation is completely different, precisely because of the nature of machine learning algorithms.

“The difference is the consciousness and will behind the meaning. That’s something AIs aren’t equipped to have right now,” Stelladia explained. “We all get influenced by our history, culture and admired figures. We constantly borrow things, learn from others… There is a meaning behind the references we use, the way we combine them in an artwork, which make them uniquely ours.”

“The algorithms are not artists,” Brett Karlan, an AI ethicist at Stanford University’s Institute for Human-Centered Artificial Intelligence, told The Daily Beast in an email. “They are not producing interesting commentaries on consumer culture by utilizing existing material culture in the way the (good) pop artists did. Instead, they are producers of uninspired and kitschy images.”

Karlan went on to explain that an AI generator is less like an artist and more like “a workshop that churns out copies of Thomas Kinkade paintings, if Thomas Kinkade painted junior-high-level Japanese cartoons or the worst kind of Caravaggio ripoff.” Beyond the plundering of art without the artists’ consent, Karlan pointed to a number of other major red flags when it comes to apps like Lensa and Time Machine—ones that are perennial problems associated with consumer AI products.

“These apps raise a number of important ethical concerns, some of which are unique to them and some of which are shared with wider concerns about AI image generation,” Karlan explained, adding that the potential of these products “generating nude images from pictures of children is a particularly horrifying instance of the worry about content moderation—something that engineers have been working harder to implement in their systems.”

That’s not just speculation, either. Many users have reported Lensa generating nude and sexually explicit images from pictures of children. And because anyone with a phone and an internet connection can download the app, there is little to stop kids from using it themselves.

However, Karlan said his biggest concern is that these AI image generators tend to homogenize the data they receive, resulting in a kind of “algorithmic monoculture.” In other words, they take photos of people from different cultures, with different standards of beauty, and attempt to make them all look the same, “making everyone basically appear in a number of pretty basic styles.”

“In general, training on large data sets tends to treat statistically minoritized aspects of those datasets as aberrations to be smoothed over, not differences to be celebrated and preserved,” he said.

So while you can make a cool new profile photo for Instagram or TikTok with these image generators, remember that it comes at a cost: one that might seem small to you but matters a great deal to the artists whose work is being used without their consent. It also raises questions about how we consume and produce art, and whether lines of code can make it at all.

“At the end of the day, art is a retelling of the world through one’s unique language,” Stelladia said. “AIs can, at least for now, reproduce a language well enough, but that doesn’t mean they understand what they are saying. I love how we all nurture collective images. Culture is alive and evolving and [humans] are part of this permanent process because of our genuine way of reinterpreting reality through meaning.”


Yahoo Movies