Apple Intelligence is the company's new generative AI offering

On Monday at WWDC 2024, Apple unveiled Apple Intelligence, its long-awaited, ecosystem-wide push into generative AI. As earlier rumors suggested, the new feature is called Apple Intelligence (AI, get it?). The company promised the feature will be built with safety at its core, along with highly personalized experiences.

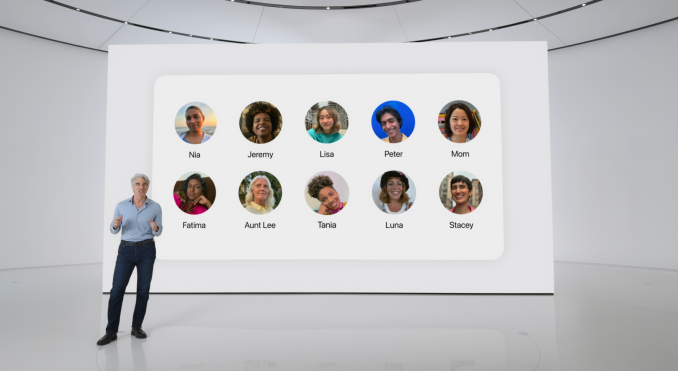

"Most importantly, it has to understand you and be grounded in your personal context, like your routine, your relationships, your communications, and more," CEO Tim Cook noted. "And of course, it has to be built with privacy from the ground up together. All of this goes beyond artificial intelligence. It’s personal intelligence, and it’s the next big step for Apple."

The company has been pushing the feature as integral to all of its operating systems, including iOS, macOS and the latest, visionOS.

"It has to be powerful enough to help with the things that matter most to you," Cook said. "It has to be intuitive and easy to use. It has to be deeply integrated into your product experiences. Most importantly, it has to understand you and be grounded in your personal context, like your routine, your relationships, your communications and more. And of course, it has to be built with privacy from the ground up. Together, all of this goes beyond artificial intelligence. It's personal intelligence, and it's the next big step for Apple."

SVP Craig Federighi added, "Apple Intelligence is grounded in your personal data and context." The feature will effectively build upon all of the personal data that users input into applications like Calendar and Maps.

The system is built on large language and intelligence models. Much of that processing is done locally, according to the company, using the latest version of Apple silicon. "Many of these models run entirely on device," Federighi claimed during the event.

That said, these consumer systems still have limitations. As such, some of the heavy lifting needs to be done off device in the cloud. Apple is adding Private Cloud Compute to the offering. The back end uses servers that run on Apple chips, in a bid to increase privacy for this highly personal data.

Apple Intelligence also includes what is likely the biggest update to Siri since it was announced more than a decade ago. The company says the feature is "more deeply integrated" into its operating systems. In the case of iOS, that means trading the familiar Siri icon for a blue glowing border that surrounds the screen while in use.

Siri is no longer just a voice interface. Apple is also adding the ability to type queries directly into the system to access its generative AI-based intelligence. It's an acknowledgment that voice is often not the best interface for these systems.

App Intents, meanwhile, brings the ability to integrate the assistant more directly into different apps. That will start with first-party applications, but the company will also be opening up access to third parties. That addition will dramatically improve the kinds of things that Siri can do directly.

The offering will also open up multitasking in a profound way, allowing a kind of cross-app compatibility. That means, for instance, that users won't have to keep switching between Calendar, Mail and Maps in order to schedule meetings.

Apple Intelligence will be integrated into most of the company's apps. That includes things like the ability to help compose messages inside Mail (along with third-party apps) or just utilize Smart Replies to respond. This is, notably, a feature Google has offered for some time now in Gmail and has continued to build out using its own generative AI model, Gemini.

The company is even bringing the feature to emojis with Genmoji (yep, that's the name). The feature uses a text field to build customized emojis. Image Playground, meanwhile, is an on-device image generator that is built into apps like Messages, Keynote, Pages and Freeform. Apple is also bringing a stand-alone Image Playground app to iOS and opening up access to the offering via an API.

Image Wand, meanwhile, is a new tool for Apple Pencil that lets users circle text to create an image. Effectively it's Apple's take on Google's Circle to Search, only focused on images.

Search has been built out, as well, for content like photos and videos. The company promises more natural language searches inside of these applications. The GenAI models also make it easier to build slideshows inside of Photos, again using natural language prompts.

Apple Intelligence will be rolling out to the latest versions of its operating systems, including iOS and iPadOS 18, macOS Sequoia and visionOS 2. It's available for free with those updates.

The feature will be coming to the iPhone 15 Pro and to Mac and iPad devices with an M1 chip or later. The standard iPhone 15 will not be getting the feature, likely owing to limitations of the chip.

As expected, Apple also announced a partnership with OpenAI that brings ChatGPT to offerings like Siri. The GPT-4o-powered feature utilizes that company's image and text generation. The offering will give users access without having to sign up for an account or pay a fee (though they can still upgrade to premium).

That's arriving for iOS, iPadOS and macOS later this year. The company says it will also be bringing integration to other third-party LLMs, though it didn't offer much detail. It seems likely that Google's Gemini is near the top of that list.

https://www.youtube.com/watch?v=Q_EYoV1kZWk
